📈 Optimization or optimisation?, Multi-Objective learning to rank, Minimum Cut

Local Optimum: short, imperfect-yet-useful ideas - Edition #17

Welcome to a new edition of Local Optimum: a short, imperfect-yet-useful collection of ideas related to optimization, decision-making, and applied Operations Research.

Let’s dive in! 🪂

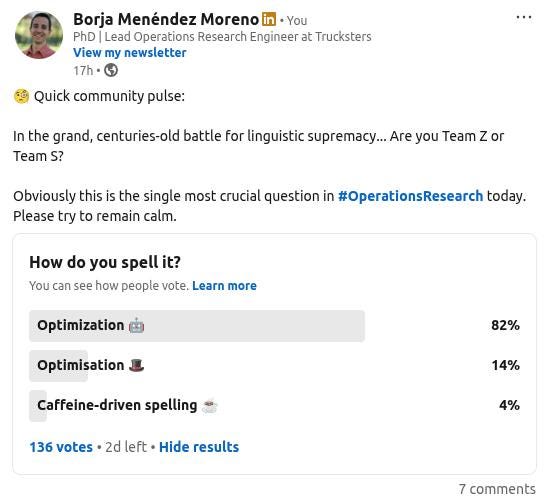

1) 🔀 Optimization or optimisation?

As you may already know, I tend to use optimization.

In fact, I never use optimisation.

I just feel that Team Z resonates more with me. But I’ve seen it written both ways, and some people are on Team S, so I wanted to know how many of us are on the right side of OR history 😉

How do you spell it?

(click on the image to go to the poll)

2) 🤹🏻 Multi-Objective learning to rank

Amazon has recently released MO-LightGBM: A Library for Multi-objective Learning to Rank with LightGBM.

It’s the first open-source framework that brings multi-objective optimization capabilities into LightGBM’s gradient-boosted decision trees, enabling you to optimize ranking models against multiple criteria in one go.
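To make "multiple criteria" concrete, here is a minimal sketch of the trade-off a multi-objective ranker has to handle. It does not use MO-LightGBM or its API. It ranks a handful of items by a weighted sum of two objectives (linear scalarization, one of the simplest ways to combine them) and reports NDCG for each objective separately. The data, the names `relevance` and `margin`, and the weights are all made up for illustration, and the gains are used as-is rather than exponentiated.

```python
import math

def dcg(gains):
    """Discounted cumulative gain of a list of gains given in ranked order."""
    return sum(g / math.log2(pos + 2) for pos, g in enumerate(gains))

def ndcg(order, labels):
    """NDCG of a ranking (list of item indices) against one label vector."""
    ideal = dcg(sorted(labels, reverse=True))
    return dcg([labels[i] for i in order]) / ideal if ideal > 0 else 0.0

# Hypothetical toy data: 5 items for one query, two objectives per item.
relevance = [3, 2, 0, 1, 0]  # e.g. how well the item matches the query
margin = [0, 1, 3, 0, 2]     # e.g. how profitable the item is

def rank_by_weight(w):
    """Rank items by a weighted sum of both objectives (linear scalarization)."""
    scores = [w * r + (1 - w) * m for r, m in zip(relevance, margin)]
    return sorted(range(len(scores)), key=lambda i: -scores[i])

# Sweep the weight to see how favoring one objective hurts the other.
for w in (0.0, 0.5, 1.0):
    order = rank_by_weight(w)
    print(w, order, round(ndcg(order, relevance), 3), round(ndcg(order, margin), 3))
```

With `w = 1.0` the ranking is perfect for relevance and poor for margin, and with `w = 0.0` it is the other way around. Picking a good compromise between those extremes is the kind of decision a multi-objective ranking library is meant to help with.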

Why would you care?

There are some applications here, but since I’ve been focused lately on the hybridization of Machine Learning and Operations Research, I’d highlight three: i) warm-starting solvers by predicting good initial solutions (à la GNN, as we saw the other day), ii) predicting good branching decisions for MIP solvers (if you’re building one or creating your own heuristics), and iii) biasing neighborhood selection toward promising regions of the solution space (for metaheuristic guidance).

If you want to read the paper, you can find it here.

3) ✂️ Minimum cut

I love it when big companies succeed in improving the way we solve problems or give us new tools to do so.

That’s why every time I see Google, Amazon, NVIDIA and the like propose new methods, I actively promote them.

Some months ago, Google proposed a new algorithm to solve the Minimum Cut Problem with a 3-step process:

Cut-preserving graph sparsification

Connect the Minimum Cut Problem with low-conductance cuts

Partition the graph into well-connected clusters
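If the terms "cut" and "conductance" are new to you, the sketch below shows what they measure on a tiny graph. It is not Google's algorithm: it simply tries every bipartition, which only works for a handful of nodes. The graph, its weights, and all function names are hypothetical, and conductance is taken here as the cut weight divided by the smaller of the two sides' volumes.

```python
from itertools import combinations

# Hypothetical toy graph: two triangles joined by one light edge (c-d).
edges = {
    ("a", "b"): 3, ("a", "c"): 3, ("b", "c"): 3,
    ("d", "e"): 3, ("d", "f"): 3, ("e", "f"): 3,
    ("c", "d"): 1,
}
nodes = sorted({n for e in edges for n in e})

def cut_weight(side):
    """Total weight of edges with exactly one endpoint in `side`."""
    return sum(w for (u, v), w in edges.items() if (u in side) != (v in side))

def volume(side):
    """Sum of the weighted degrees of the nodes in `side`."""
    return sum(w * ((u in side) + (v in side)) for (u, v), w in edges.items())

def conductance(side):
    """Cut weight divided by the smaller of the two sides' volumes."""
    rest = set(nodes) - side
    return cut_weight(side) / min(volume(side), volume(rest))

def brute_force_min_cut():
    """Try every non-trivial bipartition; only viable for tiny graphs."""
    best = None
    # Every bipartition has one side with at most half of the nodes.
    for k in range(1, len(nodes) // 2 + 1):
        for combo in combinations(nodes, k):
            side = set(combo)
            if best is None or cut_weight(side) < cut_weight(best):
                best = side
    return best

best = brute_force_min_cut()
print(sorted(best), cut_weight(best), round(conductance(best), 3))
```

The minimum cut separates the two triangles by removing only the light edge, and that same cut has very low conductance because both sides are dense internally. Exhaustive search like this grows exponentially with the number of nodes, which is why efficient algorithms for large graphs matter.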

Research on this problem has been going on for more than seven decades, and only now do we have a very efficient, general-purpose algorithm for solving large-scale instances.

Read more here:

Will adding more hardware improve your solutions?

I’m continuing the Where did the time go? series of posts next Monday.

Remember, this series of posts covers different aspects of tractability issues in optimization problems.

This time, we’ll cover:

🤔 Why we’ve been stuck with CPUs

🏎️ What faster hardware is on the shelf?

🎯 Extra computational power: what can we still do?

If you want to understand how modern hardware fits into your optimization problems, this will be useful. See you Monday!

And that’s it for today!

If you’re finding this newsletter valuable, consider doing any of these:

1) 🔒 Subscribe to the full version: if you aren’t already, consider becoming a paid subscriber. You’ll get access to the full archive, a private chat group, and 30% off new products.

2) 🤝🏻 Collaborate with Feasible. I’m always looking for great products and services that I can recommend to subscribers. Also, if you want to write an article with me, I’m open to that! If you are interested in reaching an audience of Operations Research Engineers, you may want to do that here.

3) 📤 Share the newsletter with a friend, and earn rewards in return. You’re just one referral away from getting The Modern OR Engineer Playbook: Mindset, methods, and metrics to deliver Optimization that matters.

If you have any comments or feedback, just respond to this email!

Have a nice day ahead ☀️

Borja.