📈 #79 Where did the time go? Part III: structure (Part A)

From beautiful patterns to brutal plateaus: how symmetry kills performance

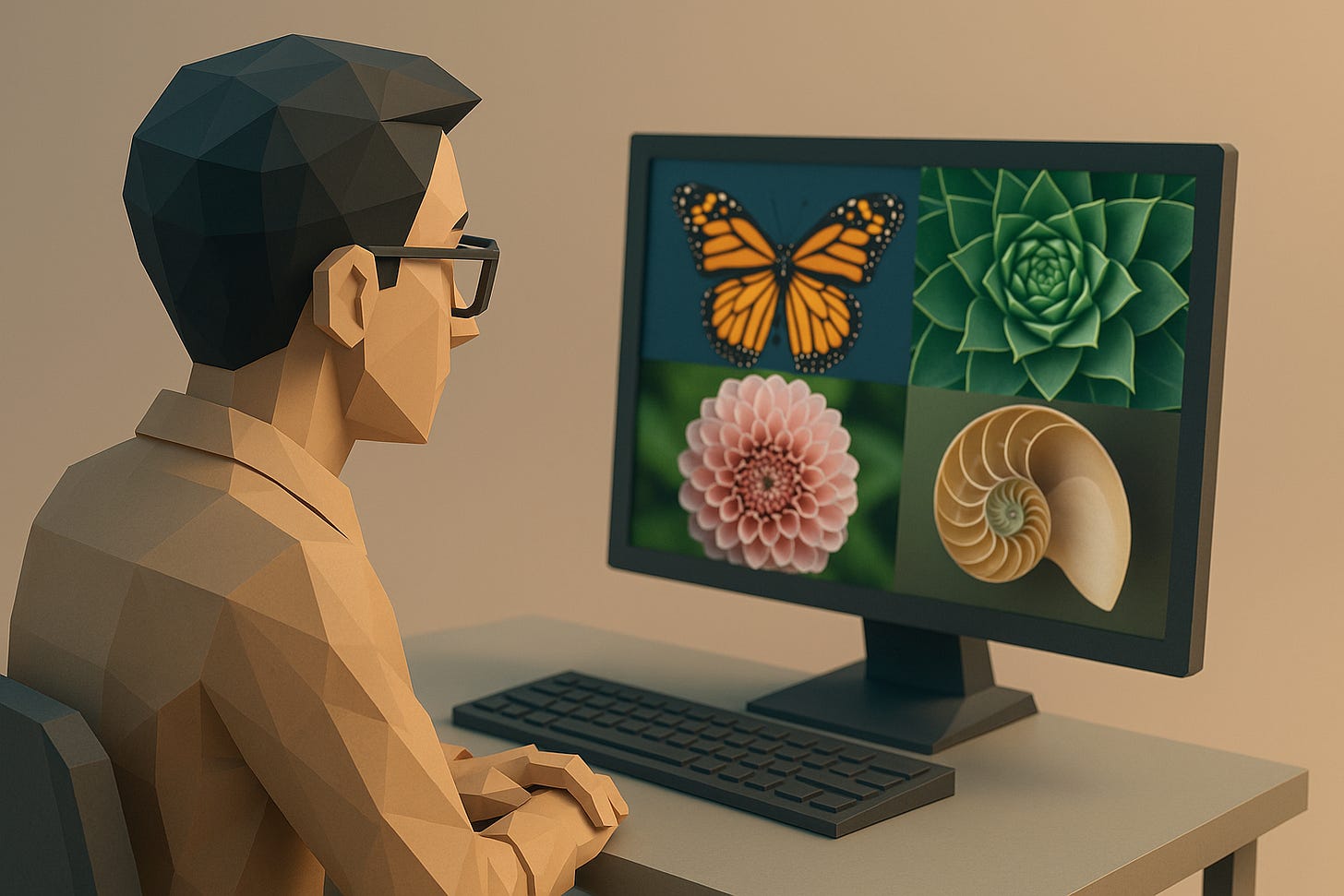

In nature, symmetry makes things beautiful.

It’s a principle of balance and regularity, and it appears in animals, plants, inorganic structures, and celestial objects equally.

While asymmetry exists, symmetrical patterns appear more frequently than expected by chance, suggesting a natural inclination towards repetition and balance.

And it’s an important feature as it reminds us about:

⚙️ Efficiency: symmetrical structures can be more efficient in terms of resource allocation and development.

🧘 Stability: symmetry can contribute to the stability and resilience of structures.

🦋 Evolutionary advantage: symmetrical forms may have an advantage in attracting mates or evading predators.

🏭 Information processing: repeating patterns and structures can simplify design and manufacturing processes.

As in nature, we also find symmetries in optimization problems.

We can see problems where it doesn’t really matter if you assign values to some resources or others, resources that might be interchangeable, and similar data that gives you completely equivalent solutions.

But opposed to the idea of symmetry in nature, these symmetries in optimization problems become an issue.

They make the search space artificially bigger, complicate the way we compare solutions, and thus make it harder to find the best possible solution.

On top, they compound with large-scale problems, making already-difficult-to-solve problems even much more difficult, consuming much more memory than really needed, and slowing down the time for finding optimal solutions as there are multiple repeated solutions over the search space.

Symmetries are a real problem.

And the bigger the problem, the bigger the pain.

That’s because symmetries scale factorially; and even small, seemingly innocent ones can multiply the number of paths the solver explores. As problem size increases, solving times can explode, not because of model size, but because the solver keeps exploring the same thing from different angles.

This topic turned out so big I had to split it into two parts. Not just because I needed to write a lot, but also because I felt it was going to be so long that you would feel overwhelmed.

In today’s Feasible article we’ll explore:

🧱 What symmetry means in optimization: a more formal definition of symmetries, why they’re a computational burden, and types of symmetries

🔬 Classical techniques to break symmetries

In Part B -for 🔐 paying subscribers 🔐- we’ll go deeper into the more advanced techniques that some solvers already implement, explore how machine learning can help you break symmetries before solving, and share practical steps (and warnings!) for your own models.

💡 Like where this is going? Click here to get Part B and the rest of the series delivered straight to your inbox:

Remember: this is the third part of the 6-part series of Where did the time go?; in case you missed it, here are Part I: benchmark before you fix and Part II: scale.

Are you ready? Let’s dive in… 🪂

🧱 What symmetry means in optimization

In optimization problems, symmetry means you have multiple equivalent ways to describe the same solution.

That sounds harmless, even elegant.

But to a solver, it’s a trap.

Solvers like CP, MIP, or SAT don’t know two solutions are “the same.” So they waste time exploring redundant branches, stalling at the root or looping through mirrored configurations.

That’s why symmetry is not just a modeling curiosity. It’s a tractability killer.

So what’s this exactly? Why is that a computational burden? What types of symmetries can you find?

Let’s break that down.

🪞 A more formal definition of symmetries

A symmetry in optimization is a transformation that preserves the solution structure.

If you can swap, flip, or reorder parts of your problem and get the same objective value, you've found a symmetry.

I know you like mathematics as much as I do, so here’s a more formal definition of it: a symmetry σ is a bijection on decision variables (or values, or both) such that if X is a solution, then σ(X) is also a solution with the same objective value.

There are different types of symmetries that affect finding multiple optimal solutions, but they may come from two sources: 🧮 formulation (your model structure creates artificial equivalences) or 🗃️ instance (the problem data itself has inherent symmetries).

🥵 The computational burden

Let’s start with one concrete example that considers two different scenarios for assigning workers to tasks.

Scenario A: 3 distinguished workers, 3 tasks. In this case, the assignment is easy to see as workers are easily distinguished, so the solver takes no time.

Scenario B: 3 identical workers, same 3 tasks. As the workers are all identical from a mathematical perspective, there exists 3! = 6 equivalent solutions.

When looking for solutions with branch-and-bound, the solver will waste time proving there’s nothing better in each branch. But since solutions are all equivalent, there’s not so much it can do to prune branches.

That’s why branch-and-bound gets trapped in symmetric subtrees: it explores many more branches than needed.

You’ll see the root node stalls, there are long plateaus in the gap, and that it may take exponentially larger times for problems that doesn’t seem too difficult at first sight.

Seen this in your own models? Share your story here:

🔢 Types of symmetries

Understanding symmetry types helps you choose the right breaking technique. Here's the practical classification:

🚚 Variable symmetries: you have multiple variables representing identical objects, like identical vehicles in routing, identical machines in scheduling, or identical workers -as we have seen- in assignments.

🎨 Value symmetries: your decision variables can take values that are functionally equivalent, like ‘red’ or ‘blue’ values in a coloring problem (the values per se don’t matter, just that they’re different). In general terms, these appear when you could rename values without changing problem meaning.

🔀 Variable + value symmetries: both your variables and your values can be swapped. For instance, in the N-Queens problem, where we need to place a queen in each row and column of a chessboard without attacking each other, if we model it like each row is a variable and the column is the value (where the queen is placed), what happens if we can rotate the entire board? Columns become rows and vice versa, so new rotated solutions satisfy all constraints but they’re semantically the same, just seen from another angle. Yet the solver doesn’t know that.

🫥 Hidden symmetries: your data looks different but creates equivalent solutions, like when you have workers with "different" hourly rates: [15.00€, 15.01€, 14.99€] (effectively identical), vehicles with tiny capacity differences: [100, 101, 99] units, or locations with nearly identical coordinates.

🎯 Conditional symmetries: symmetries that only exist under certain conditions. These are harder to break because they’re dynamic.

🧩 Partial symmetries: some objects are identical, others aren't, like when you have 5 vehicles where 3 are identical trucks, and 2 are different vans. You’ll need to apply different techniques to different groups.

🔬 Classical techniques to break symmetries

Now that we understand better what symmetries are in optimization problems, let’s see different techniques to breaking them.

Breaking symmetries sounds straightforward, just add some constraints, right? Not quite.

Studies indicate we need to be ⚠️ cautious ⚠️:

Complete symmetry breaking for certain combinations is an NP-hard problem, so we’d need to decompose them into simpler, weaker constraints reducing its propagation effectiveness, even though it's computationally easier to implement.This creates a practical dilemma:

→ Simple constraints = easier implementation, weaker propagation

→ Global constraints = stronger propagation, complex implementation

This tradeoff affects both solver performance and developer sanity.

Having said that, what strategies can we use to break symmetries without breaking our brains?

🧮 Classical symmetry-breaking techniques

There are four classical techniques we can use to break symmetries:

🔤 Lexicographic ordering

It forces solutions to be "lexicographically smallest" among all symmetric variants. It’s like alphabetical ordering, but for solutions.

In this case solution [1,3,2] comes before [2,1,3] as it’s forced to assign first 1 rather than 2, and solution [1,2,3] comes before [1,3,2]. This forces a canonical form.

👤 Representative constraints

It picks one representative from each symmetric group and add constraints to enforce it, fixing one variable per symmetry group.

For instance, you could always assign route 1 to truck 1, route 2 to truck 2, route 3 to truck 3…

This is easier to implement than lexicographic ordering, but requires confidence that the choice of representative is neutral.

➡️ Precedence constraints

It enforces hierarchical usage of resources, so if you use truck 2 then you must use truck 1.

It’s even easier to implement and gives good results with minimal overhead.

⚖️ Capacity-based breaking

It uses domain-specific information to create a natural ordering.

For example, you’d order vehicles by capacity, then cost; workers by skill levels, then availability; time slots by demand, then resource availability.

One good thing is that it uses business logic that practitioners already understand and it’s often robust.

🏁 Conclusions

Today, we laid the foundation for understanding symmetries in optimization problems.

You’ve seen why they matter, how they trap solvers, and four classic strategies to start breaking them right away.

In the second part, we’ll go further:

The most efficient modern techniques used in solvers today

How ML can predict symmetry patterns and help you choose constraints

And practical decision frameworks for when to apply, skip, or combine techniques

See you next week: same place, same problem-solving energy.

Let’s keep optimizing,

Borja.

Know someone who fights with slow solvers? Share Feasible with them: