📈 #64 Mind Evolution and the frontier of LLM-based optimization solvers

Exploring a novel approach to solving optimization problems without formal mathematical modeling.

Large Language Models (LLMs) like ChatGPT have transformed AI, pushing the boundaries of Natural Language Processing and reasoning.

However, their ability to solve complex planning and decision-making tasks remains restricted.

Extracting critical information from a text-based optimization problem and building a mathematical model or algorithm to solve it is very challenging.

Traditional solvers are the clear choice for complex decision-making tasks. They offer optimality guarantees (where needed), are trusted in Operations Research, and have powered critical decision-making in various fields for decades.

What if the obstacle is structuring the problem into a mathematical model?

Many researchers are using LLMs to extract decision variables, constraints, and objective functions from problem descriptions to automate model formulation. This approach is theoretically promising but has significant practical limitations.

If extracting a mixed-integer programming (MIP) model with an LLM fails, is there another way forward? Could we build something that doesn’t require formal mathematical structuring and operates purely at the language level?

Today in Feasible we’ll see:

💥 Why traditional methods have struggled

🧠 A novel approach from DeepMind and its uniqueness

🤖 Why it represents a fundamental shift in LLM-driven problem-solving

Before we start... I recently spoke at the University of Delhi about how OR can drive real-world impact.

Ready? Let’s dive in… 🪂

💥 What was tried and why it failed

There’s a significant research area around solving optimization problems with LLMs, either by generating algorithms or by generating mathematical models that are then passed to a solver.

It’s a hot topic with great potential to change how we, as OR practitioners, work.

Several studies have connected AI and optimization by using LLMs to automate problem formalization.

We’ve seen a study from Drexel University on the limits of language models as planning formalizers, and another from a team at Harvard, MIT, and IBM that sought to solve real-world planning with LLMs.

The typical process to automate our work involves (i) extracting decision variables, constraints, and objectives, where an LLM analyzes the natural language description and converts it into a model or algorithm, (ii) coding that model or algorithm, and (iii) solving it with a solver or running the algorithm.
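The three steps above can be sketched in a few lines. This is only a toy illustration under loud assumptions: `extract_model` is a hypothetical stand-in that hard-codes what an LLM would be asked to produce, and `solve` is a brute-force enumeration standing in for a real solver. The furniture problem and all names are invented for the example.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Optional


@dataclass
class Model:
    variables: dict[str, range]                # name -> integer domain
    constraints: list[Callable[[dict], bool]]  # each returns True when satisfied
    objective: Callable[[dict], float]         # value to maximize


def extract_model(description: str) -> Model:
    # Steps (i) and (ii): hypothetical stand-in for LLM extraction and coding.
    # A real pipeline would prompt an LLM here; the result is hard-coded instead.
    return Model(
        variables={"chairs": range(0, 11), "tables": range(0, 11)},
        constraints=[lambda v: 2 * v["chairs"] + 4 * v["tables"] <= 20],  # wood limit
        objective=lambda v: 30 * v["chairs"] + 50 * v["tables"],          # profit
    )


def solve(model: Model) -> tuple[Optional[dict], float]:
    # Step (iii): brute-force enumeration standing in for a real solver.
    names = list(model.variables)
    best, best_value = None, float("-inf")
    for values in product(*model.variables.values()):
        candidate = dict(zip(names, values))
        if all(check(candidate) for check in model.constraints):
            value = model.objective(candidate)
            if value > best_value:
                best, best_value = candidate, value
    return best, best_value


description = (
    "We have 20 units of wood. A chair needs 2 units and earns 30; "
    "a table needs 4 units and earns 50. Maximize profit."
)
solution, profit = solve(extract_model(description))
print(solution, profit)
```

Everything downstream depends on `extract_model` being right, which is exactly the step an LLM is asked to automate.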

This approach was expected to work because it mirrors how we usually work. LLMs excel at extracting information from text and solvers are efficient with well-defined problems. Automating this process could reduce human intervention and make optimization tools more accessible.

As Richard Oberdieck highlighted in LLM-ify me, LLMs can’t be a solver since they hallucinate. Using them to formalize the problem instead has also proved ineffective in practice:

📖 Extracting a model from text is harder than expected. Many real-world problems are loosely defined, making it difficult to extract precise decision variables, constraints, and objective functions. Moreover, LLMs can misinterpret the description, leading to incomplete or incorrect problem definitions.

🎯 Solvers need perfectly defined models. If one constraint is missing or incorrectly formulated, the solver either reports infeasibility or returns a solution that is optimal for the wrong problem. Some constraints and objectives require fuzzy logic or rewording before they can be incorporated into the model.

🐛 Coding errors. Generated code often requires manual debugging to run correctly in a solver. LLM-generated code becomes more error-prone as task complexity increases, and optimization problems are complex tasks.
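To make the 🎯 point concrete, here is a small sketch of what a single dropped constraint does. The project data and the "A and B share a team" rule are invented for illustration, and the brute-force search is a stand-in for a real solver.

```python
from itertools import product

# Hypothetical data: project name -> (cost, value)
projects = {"A": (6, 10), "B": (4, 9), "C": (5, 6)}
BUDGET = 10


def within_budget(pick):
    return sum(projects[name][0] for name in pick) <= BUDGET


def not_both_a_and_b(pick):
    # Business rule that is easy to lose in extraction: A and B share a team.
    return not {"A", "B"} <= set(pick)


def best_pick(constraints):
    # Enumerate every subset of projects and keep the most valuable feasible one.
    best, best_value = (), 0
    for mask in product([0, 1], repeat=len(projects)):
        pick = tuple(name for name, used in zip(projects, mask) if used)
        if all(check(pick) for check in constraints):
            value = sum(projects[name][1] for name in pick)
            if value > best_value:
                best, best_value = pick, value
    return best, best_value


full = best_pick([within_budget, not_both_a_and_b])
incomplete = best_pick([within_budget])  # the extraction missed one constraint

print("full model:      ", full)
print("incomplete model:", incomplete)
```

The incomplete model does not crash or warn. It returns a plan with a higher objective value that breaks the real-world rule, which is why this kind of error is hard to spot.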

What if there were a different approach? What if it’s not strictly necessary to translate text into a mathematical model? What if LLMs could act as a solver, producing solutions and refining them directly in the language space?

Let’s see Mind Evolution.

🧠 Mind Evolution: key contributions and highlights

While DeepMind explored generating heuristics with FunSearch, others proposed ways to automatically develop algorithms for optimization problems using LLMs, like this NeurIPS 2024 paper or this IEEE Transactions on Evolutionary Computation one (thanks Emilio for the references!).

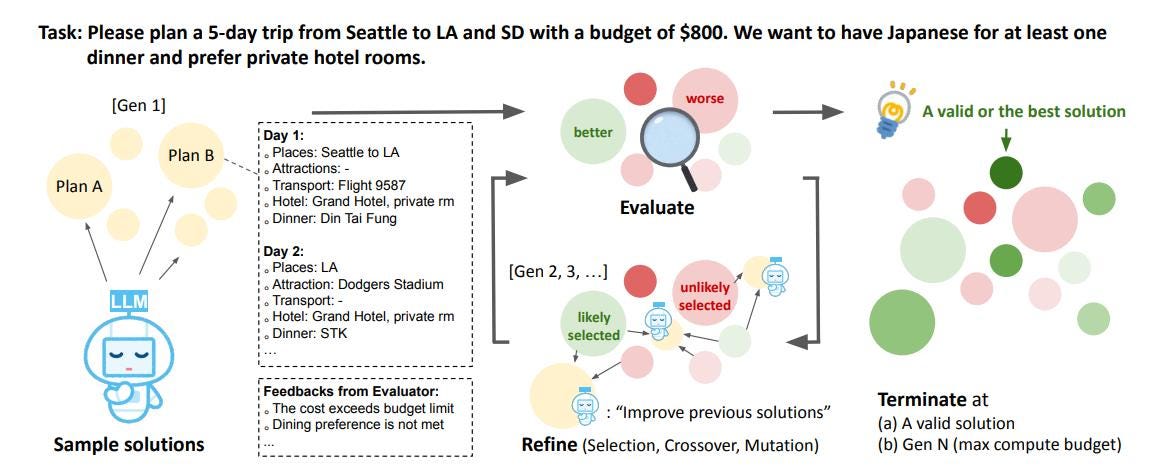

Mind Evolution’s idea is clear: don’t translate a plain-language problem into a solver model or an algorithm, because that translation is unreliable.

Instead, generate candidate solutions and manipulate them through language. The novelty lies in not needing defined decision variables, constraints, and objective functions.

It uses a Genetic Algorithm-like search to balance exploration and exploitation, keeping a population of candidate solutions rather than relying on static sampling or on refining a single solution.

It retains the core ideas of Genetic Algorithms: selection (choosing the best solutions based on task-specific evaluators), crossover (combining high-performing solutions to generate improved candidates), and mutation (introducing variations to avoid stagnation and enhance exploration).
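A toy sketch of that loop, operating on plain-text solutions, might look like this. It is not the paper’s implementation: `llm_crossover` and `llm_mutate` are hypothetical stand-ins for LLM prompts, replaced here by simple string operations so the example runs on its own, and the day-trip task and `fitness` function are invented.

```python
import random

ACTIVITIES = ["museum", "park", "lunch", "beach", "market", "concert"]
MUST_HAVE = {"museum", "lunch", "concert"}


def fitness(plan: str) -> int:
    # Toy evaluator: count how many required stops the plan covers.
    return len(MUST_HAVE & set(plan.split(", ")))


def llm_crossover(a: str, b: str) -> str:
    # Stand-in for prompting an LLM to merge two parent plans.
    first, second = a.split(", "), b.split(", ")
    merged = first[: len(first) // 2] + second[len(second) // 2 :]
    return ", ".join(dict.fromkeys(merged))  # drop duplicate stops, keep order


def llm_mutate(plan: str, rng: random.Random) -> str:
    # Stand-in for prompting an LLM to revise one part of a plan.
    stops = plan.split(", ")
    stops[rng.randrange(len(stops))] = rng.choice(ACTIVITIES)
    return ", ".join(dict.fromkeys(stops))


def evolve(population: list[str], generations: int = 10, seed: int = 0) -> str:
    rng = random.Random(seed)
    for _ in range(generations):
        # Selection: the better half survives and acts as parents.
        ranked = sorted(population, key=fitness, reverse=True)
        parents = ranked[: len(population) // 2]
        children = []
        while len(parents) + len(children) < len(population):
            a, b = rng.sample(parents, 2)
            # Crossover, then mutation, both at the text level.
            children.append(llm_mutate(llm_crossover(a, b), rng))
        population = parents + children
    return max(population, key=fitness)


initial = [", ".join(random.Random(i).sample(ACTIVITIES, 3)) for i in range(6)]
best = evolve(initial)
print(best, fitness(best))
```

Because the parents survive each generation, the best score never gets worse. Swapping the two stand-in functions for real LLM calls is where the language-level manipulation would happen.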

How can it evaluate solutions through a fitness function? A Tencent paper describes a method to refine and self-improve LLM responses based on feedback.

Mind Evolution evaluates solutions through textual feedback and heuristic scoring rather than mathematical formulations.
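As a rough idea of what "textual feedback plus a heuristic score" can look like, here is a minimal sketch of an evaluator for a plain-text trip plan. The plan format (`City: cost` per line), the `Evaluation` type, and the scoring rule are all assumptions made for the example, not the evaluator used in the paper.

```python
from dataclasses import dataclass


@dataclass
class Evaluation:
    score: int            # 0 is best; each violation costs one point
    feedback: list[str]   # plain-language notes that could go back into a prompt


def evaluate(plan_text: str, budget: int, required: set[str]) -> Evaluation:
    # Parse the assumed "City: cost" format, one stop per line.
    costs = {}
    for line in plan_text.strip().splitlines():
        city, _, cost = line.partition(":")
        costs[city.strip()] = int(cost)

    feedback = []
    total = sum(costs.values())
    if total > budget:
        feedback.append(f"Total cost {total} exceeds the budget of {budget}.")
    for city in sorted(required - set(costs)):
        feedback.append(f"The plan never visits {city}.")
    return Evaluation(score=-len(feedback), feedback=feedback)


result = evaluate("Rome: 120\nFlorence: 200", budget=300, required={"Rome", "Venice"})
print(result.score)
for note in result.feedback:
    print("-", note)
```

The score drives selection, while the feedback strings give the LLM something concrete to fix in the next revision.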

🤖 Why Mind Evolution is a shift

OR practitioners are trained to structure problems mathematically.

We might consider other options for optimization problems, especially the challenging ones with fuzzy logic or complex descriptions.

Instead of simply stating that structured optimization is challenging, let’s examine the trade-offs between traditional solvers and heuristic-based refinement approaches like Mind Evolution.

1️⃣ When does a solver make sense?

🔹 Well-defined problems. If a problem can be fully encoded with precise variables and constraints, a solver can find optimal solutions efficiently.

🔹 Optimality is required. Some industries (e.g., finance, manufacturing) need provable guarantees that heuristics cannot provide.

2️⃣ When could Mind Evolution be the preferable choice?

🚀 The problem definition is ambiguous. If decision variables or constraints are hard to extract explicitly, an iterative language-based approach helps prevent premature formalization errors.

🚀 You lack a team of OR experts. Mind Evolution operates directly through language, enabling non-technical users to solve their problems and reducing the time to an automated solution.

Mind Evolution complements traditional solutions like solvers or algorithms, rather than replacing them. It offers a modern way to solve unstructured problems.

🏁 Conclusion

Traditional approaches to optimizing LLM outputs have limitations. Whether they rely on heuristic sampling, structured solvers, or LLM-assisted problem formalization, they struggle with ambiguity, require exact formulations, or suffer from long debugging cycles.

Mind Evolution represents a paradigm shift. By applying evolutionary search principles, it enables LLMs to refine solutions without explicit mathematical formalization. This makes it more adaptable, scalable, and user-friendly than previous approaches.

Mind Evolution embraces the fluid and evolving nature of real-world decision-making, rather than fitting every problem into a rigid framework. This makes it a promising technique for optimizing LLM-driven reasoning.

Let’s keep optimizing,

Borja.

PS: here’s the paper: Evolving Deeper LLM Thinking.