📈 #63 Why VNS beats Genetic Algorithms in 90% of real optimization problems

And requires just one parameter to tune.

I began my Operations Research journey 11 years ago thanks to metaheuristics.

They are effective ways to get good quality solutions quickly and understand the inner details of optimization algorithms.

I see them playing a growing role in this field, but that’s a topic for another post.

Today I want to discuss two different metaheuristics.

They’re quite different, being diametrically opposed. One takes a population of solutions, the other just one. One is more stochastic, the other more deterministic. One relies on biologically-inspired knowledge, the other focuses on optimization from all angles.

Today I want to discuss Genetic Algorithms and Variable Neighborhood Search.

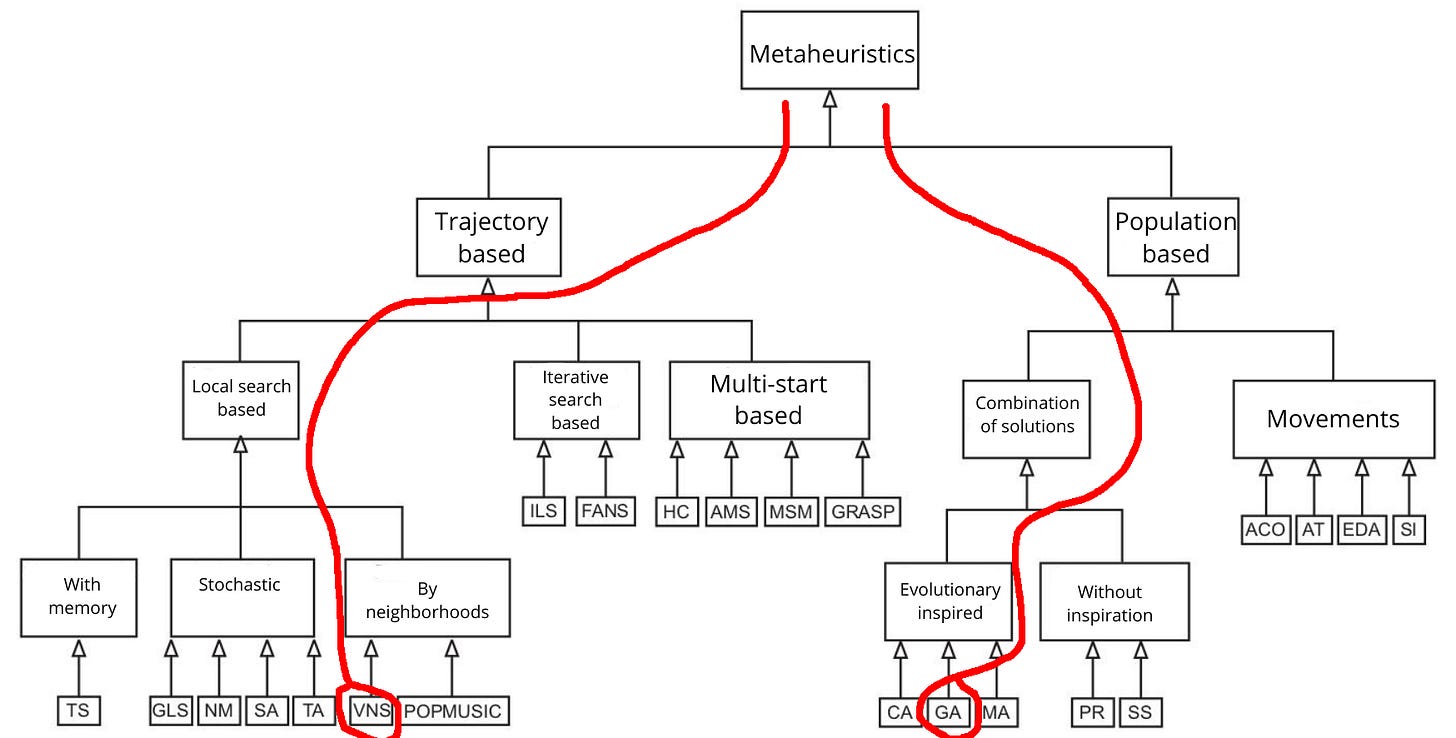

A taxonomy of metaheuristics shows their differences:

I can’t say I hate one (I’m not that kind of person), but I’m really fond of the other.

Today in Feasible we’ll see:

🧬 Understanding Genetic Algorithms

⚠️ The negative aspects of Genetic Algorithms

✅ Variable Neighborhood Search: a strong alternative

Before we start... Oskar Schneider featured me in a mini-interview!

Ready? Let’s dive in... 🪂

🧬 Understanding Genetic Algorithms

Genetic Algorithms are a branch of Evolutionary Algorithms, stemming from the neo-Darwinian idea of species evolution:

Well-adapted individuals have a higher chance of living longer and producing offspring that inherit their traits. In contrast, poorly adapted individuals have a lower survival probability, leading to fewer offspring and potential extinction.

Genetic Algorithms emerged in the 1960s through John Holland’s work at the University of Michigan, though previous research had similar ideas. The concept of recombining solutions was a key contribution. GAs mimicked selection, crossover, and mutation mechanisms.

Holland's groundbreaking idea was to apply natural selection principles to computational problem-solving. This led to his 1975 book "Adaptation in Natural and Artificial Systems." This bio-inspired approach captured the imagination of researchers and practitioners, spawning a field of evolutionary computation.

Their introduction offered a new perspective on solving optimization problems by exploring large search spaces in an uncharted way.

How do they work and what are their key contributions?

⚙️ How it works

The beauty of GAs lies in their mimicry of natural evolution:

Algorithm: Basic Genetic Algorithm

1. Initialize population P with random solutions

2. While (termination condition not met):

2.1 Evaluate fitness of all individuals

2.2 Select parents based on fitness

2.3 Create offspring through crossover

2.4 Apply mutation to maintain diversity

2.5 Replace old population with new generation

3. Return best solution foundGAs operate with three evolutionary mechanisms:

🎯 Selection: individuals (solutions) are chosen based on a fitness function. This ensures better-performing ones have a higher chance of passing their attributes to the next generation.

🔀 Crossover: selected individuals exchange parts of their structure to create new offspring, combining features from both parents to generate enhanced solutions.

🔄 Mutation: random alterations maintain population diversity, preventing early convergence on suboptimal solutions.

GAs are powerful.

They allow exploration of the search space (mutation) along with exploitation (crossover), and their population-based search enables straightforward parallel exploration.

📌 Key contributions

They were influential as early metaheuristics for optimization problems. Currently, I see three significant contributions:

💡 Innovative approach. GAs were among the first metaheuristics to propose a biological analogy. This inspired numerous subsequent algorithms and frameworks.

🔄 Versatility. Their general-purpose nature made them a favorite in academic research, allowing applications across diverse domains without significant problem-specific customization.

🧭 Exploration capabilities. The stochastic elements of GAs enable exploration of vast search spaces, often uncovering solutions that deterministic methods might miss.

GAs have achieved significant successes in various domains, but they come with trade-offs.

⚠️ The negative aspects of Genetic Algorithms

While GAs have significantly influenced metaheuristics development, they also have drawbacks, particularly in real-world applications.

Genetic Algorithms require meticulous calibration of parameters like mutation rates, crossover probabilities, selection mechanisms, and population size. This leads to a time-consuming and computationally expensive trial-and-error process. The time spent fine-tuning is not spent finding better solutions.

The core operators in GAs are designed to be universally applicable. This lack of problem-specific structure is beneficial, but it means they rarely exploit specific properties of the problem. As a result, they can miss critical opportunities to optimize based on domain-specific insights.

In high-dimensional and structured decision spaces, the performance of GAs can degrade significantly. This requires additional layers of algorithms like local searches.

They converge slowly to near-optimal solutions on many practical problems due to maintaining a large, diverse population in each iteration, using computational resources inefficiently.

And on top of everything, first you need to learn their jargon. Their evolutionary mechanisms are steps for diversifying and intensifying (or exploring-exploiting) the search space, like any other metaheuristic.

I can’t say I enjoy them.

I prefer simpler, optimization-focused metaheuristics like...

✅ Variable Neighborhood Search: a strong alternative

In 1997, Pierre Hansen and Nenad Mladenović developed VNS, which emerged from three straightforward observations:

A local optimum in one neighborhood doesn’t have to be so in another.

A global optimum is a local optimum with respect to all possible neighborhood structures.

For many problems, local optima with respect to one or several neighborhood structures are relatively near.

Instead of relying on random processes, VNS systematically explores the search space by switching between different neighborhood structures. This evolution represents a shift towards more systematic metaheuristics aligned with the problem’s structure.

I already wrote about this metaheuristic in Warehouses -Part II-: optimization problems for picking orders, so I won’t detail the specific implementation today.

I prefer to describe its workings and key contributions.

⚙️ How it works

The algorithm starts with an initial solution, which can be constructed randomly, with a greedy heuristic, or any method from 5 ways to build a solution for your optimization problem. Start with an improved solution by a local search that gives a local optimum.

The better the initial solution, the more likely it is to find a good final solution. Just apply a local search method to that initial solution for a good starting point.

If you remember the algorithm, VNS systematically explores neighborhoods. It first applies a perturbation move (Shake), then an improvement method (Improve), and finally decides whether to change the neighborhood. All of this is embedded in a double loop: one to count the time or iterations and the other the explored neighborhoods.

Thanks to this minimalistic and flexible structure, I see VNS as a framework rather than a recipe. Why?

You can define as many neighborhoods as necessary to exploit your problem knowledge.

You can reuse your neighborhoods in the Shake and Improve methods. The former allows randomness, while the latter allows deterministic moves.

You can have only the Shake procedure, only the Improve one, or both. You can implement a plain local search to improve solutions or a combination of local searches through your defined neighborhoods.

You can treat the Improve method as a way to run another metaheuristic inside, embed it into a multi-start approach to enhance its effectiveness, or parallelize the search to diversify or intensify solutions.

See definitions in Warehouses -Part III-: Unlock high-quality solutions for Order Picking optimization problems.

(Now I think I’ve already talked a lot about VNS.)

📌 Key contributions

I see VNS as a superior metaheuristic in many cases because:

🗺️ Its structured exploration of the search space. VNS leverages predefined neighborhood structures for a more directed search. This exploration is often more efficient in identifying high-quality solutions.

🎛️ Easy tuning. VNS provides clear control over the search process, making it easier to debug and reduce testing time when tuning parameters. You need to set the number of iterations or execution time!

🧩 Adaptability. VNS is particularly well-suited to problems where the structure can be explicitly exploited to enhance performance. Consider the many that fall under the umbrella of combinatorial optimization.

These contributed to VNS being my preferred metaheuristic during my PhD thesis. I love it, as it offers a pragmatic approach with key advantages.

🏁 Conclusion

Genetic Algorithms have made important contributions to optimization.

Their nature-inspired approach has advanced metaheuristics. However, the strengths that made GAs popular -versatility and generality- introduce challenges in real-world applications. Extensive tuning, lack of problem-specific structure, and computational inefficiencies make them less appealing for structured, high-stakes environments.

In contrast, Variable Neighborhood Search offers a practical alternative.

VNS provides better control, faster convergence, and scales more effectively to complex, real-world problems by incorporating problem-specific insights and systematically exploring the search space.

For OR practitioners aiming for tangible business results, the choice is clear. Practical considerations should drive algorithm selection rather than conceptual appeal. Simpler methods like VNS can outperform complex approaches, highlighting that theoretical elegance doesn't guarantee practical effectiveness.

Given its advantages, VNS should be the go-to metaheuristic for real-world optimization problems. Its systematic and problem-tailored approach consistently outperforms the more general and computationally intensive Genetic Algorithms, making it the better choice for practitioners seeking reliable and efficient solutions.

Genetic Algorithms still have their place, though.

Next week I’ll discuss an impressive application of Genetic Algorithms from a recent Google DeepMind paper overlooked by other AI news.

Until then...

Let’s keep optimizing,

Borja.

Great explanation, thanks! Agree on all your points about GAs. They're elegant for sure, but definitely cumbersome to truly get them to work. I know VNS but have never used it in practice. My go-to metaheuristic for highly structured problems is grammatical evolution, especially an EDA version we designed during my PhD that works like ✨ in program synthesis. We work on automated ML which is basically evolving ML pipelines, it's a very high dimensional and highly structured search space, because you have to pick which algorithms to use, in which order, and then hyperparameters, which can be other algorithms, so the search space is hierarchical. Also some changes are very non-local, suffice to say it's a hell of a search problem. So nothing based on changing solutions seems to work, and we had to resort to EDAs but there were no EDAs for such highly structured spaces, so we came up with a fairly straightforward way to turn grammatical evolution into an EDA using a probabilistic grammar and a bayesian learning framework to update production probabilities based on sampled pipeline' fitness. It was a really fun project to work on.

Great article and excellent contributions.