📈 #52 Operations Research reimagined (Part III): mindset

There is a transparency issue. Let's fix it!

Today’s post is sponsored by…

The DecisionOps platform helping optimization teams build more decision models instead of more decision tools.

👉 Do you want to sponsor a post in Feasible? Just reply to this email.

A paradox runs through analytical decision-making: Machine Learning and Operations Research both aim to drive better decisions, yet they take radically different approaches to transparency.

OR holds a distinct advantage in terms of algorithmic clarity.

Our mathematical models state their objectives and constraints explicitly, providing a clear path to understanding solutions. Even in complex scenarios, the logic remains traceable: sometimes difficult to follow, but fundamentally comprehensible.
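To make this concrete, here is a minimal sketch of a tiny, hypothetical production-planning model (the products, coefficients, and constraint names such as `plant_1_hours` are illustrative assumptions, not a real case). With only two decision variables, we can enumerate every corner point of the feasible region, pick the best one, and then report the slack of each constraint. Constraints with zero slack are the ones that bind, so the reasoning behind the answer is visible.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical two-product model: maximize 3x + 5y
OBJECTIVE = (3, 5)
# Each constraint: (name, (a_x, a_y), rhs), meaning a_x*x + a_y*y <= rhs
CONSTRAINTS = [
    ("plant_1_hours", (1, 0), 4),
    ("plant_2_hours", (0, 2), 12),
    ("plant_3_hours", (3, 2), 18),
    ("x_nonneg", (-1, 0), 0),
    ("y_nonneg", (0, -1), 0),
]


def intersect(c1, c2):
    """Solve the 2x2 system where both constraints hold with equality (Cramer's rule)."""
    (_, (a1, b1), r1), (_, (a2, b2), r2) = c1, c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None  # parallel constraints: no unique intersection point
    x = Fraction(r1 * b2 - r2 * b1, det)
    y = Fraction(a1 * r2 - a2 * r1, det)
    return x, y


def is_feasible(point):
    """Check that a point satisfies every constraint."""
    x, y = point
    return all(a * x + b * y <= rhs for _, (a, b), rhs in CONSTRAINTS)


def solve():
    """Brute-force vertex enumeration: only viable for tiny 2-variable models."""
    best = None
    for c1, c2 in combinations(CONSTRAINTS, 2):
        point = intersect(c1, c2)
        if point is None or not is_feasible(point):
            continue
        value = OBJECTIVE[0] * point[0] + OBJECTIVE[1] * point[1]
        if best is None or value > best[1]:
            best = (point, value)
    return best


def explain(point):
    """Slack per constraint; zero slack means the constraint is binding."""
    x, y = point
    return {name: rhs - (a * x + b * y) for name, (a, b), rhs in CONSTRAINTS}


point, value = solve()
print("x =", point[0], "y =", point[1], "profit =", value)
for name, slack in explain(point).items():
    print(f"{name}: slack {slack}{' (binding)' if slack == 0 else ''}")
```

Running it gives x = 2, y = 6 with a profit of 36, and the report shows that the `plant_2_hours` and `plant_3_hours` constraints are binding while `plant_1_hours` has 2 hours to spare. Real solvers use simplex or interior-point methods rather than enumeration; the point of the sketch is only that the objective, the constraints, and the reasons for the answer are all inspectable.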

Contrast this with ML's "opaque" algorithms. Neural Networks and Transformers make decisions through complex layers that resist straightforward interpretation. While explainability tools exist, they require additional trust in their mechanisms.

However, when we examine organizational culture and knowledge-sharing practices, the situation reverses dramatically.

ML thrives in an open-source ecosystem, where leading tools are freely available and community-driven innovation is the norm. This transparency has accelerated adoption, and decision-makers have grown comfortable implementing ML solutions.

OR, by contrast, remains largely bound to proprietary approaches. The mathematical clarity of our solutions is hidden behind closed software and implementations, limiting the field's accessibility and adoption.

📌 Why does this matter now?

This transparency gap isn't just an academic concern. It's shaping the future of business analytics:

🧐 Organizations are demanding explainable, accessible solutions

👩💻 The next generation of analysts and developers expect open tools and communities

💰 Companies are making multi-million-dollar decisions when choosing between OR and ML approaches

📖 The rise of AI has made transparency essential for solution adoption

🤷♂️ The OR talent gap is widening while ML attracts new practitioners daily

Understanding this disconnect and its implications for OR's future is crucial.

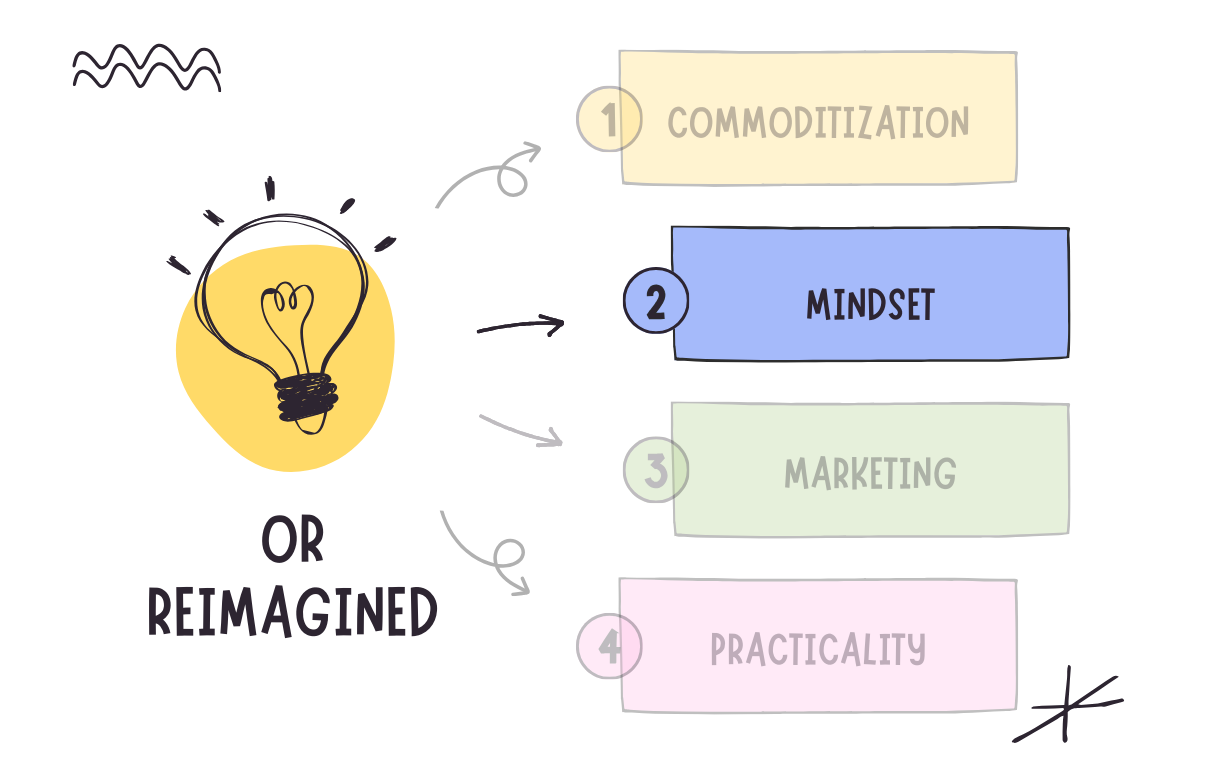

In today's Feasible edition, we'll explore:

🕵️♂️ The impact of OR's transparency issue

📜 How historical decisions shaped our closed ecosystem

💡 What we can learn from ML's transparent approach

This is the third post in a six-part series on the issues that have prevented OR from reaching its full potential, written to help you avoid these patterns. If you missed them, read Part I and Part II.

🎧 At the top, you can listen to a podcast-like version of this post!

→ Ready to dive into OR's transparency challenge? Let's get started! 🪂

🕵️♂️ The problem with the lack of transparency

Trust is fundamental to adopting any analytical solution.

In Operations Research, trust is critical because our solutions drive significant business decisions. Yet, our field often operates behind a veil of opacity that undermines this trust.

While OR prides itself on mathematical precision and optimal solutions, the path to these solutions often remains hidden.

Proprietary solvers hide their algorithms behind simplified interfaces, creating a black box that decision-makers struggle to trust. The result is a paradox: we offer precise, deterministic solutions but conceal the reasoning that makes them reliable.
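For linear programs, part of that reasoning can be exposed without opening the solver at all. By LP duality, if a primal solution and a dual solution (the shadow prices that LP solvers can typically report) are both feasible and their objective values match, the primal solution is provably optimal, whoever computed it. The sketch below assumes a small hypothetical two-product model and hard-codes a candidate solution and dual values for it; `check_certificate` is an illustrative helper, not part of any solver's API.

```python
from fractions import Fraction

# Hypothetical model in matrix form: maximize c.x subject to A x <= b, x >= 0
c = [3, 5]
A = [[1, 0], [0, 2], [3, 2]]
b = [4, 12, 18]


def check_certificate(x, y):
    """Return True if primal x and dual y are feasible and their objectives match.

    Dual problem: minimize b.y subject to A^T y >= c, y >= 0.
    By weak duality, any feasible y bounds every feasible primal objective
    from above, so equal objective values prove that x is optimal.
    """
    primal_ok = all(v >= 0 for v in x) and all(
        sum(a_ij * x_j for a_ij, x_j in zip(row, x)) <= b_i
        for row, b_i in zip(A, b)
    )
    dual_ok = all(v >= 0 for v in y) and all(
        sum(A[i][j] * y[i] for i in range(len(A))) >= c[j]
        for j in range(len(c))
    )
    primal_value = sum(c_j * x_j for c_j, x_j in zip(c, x))
    dual_value = sum(b_i * y_i for b_i, y_i in zip(b, y))
    return primal_ok and dual_ok and primal_value == dual_value


# Candidate solution and dual values (hard-coded here for illustration)
x_star = [Fraction(2), Fraction(6)]
y_star = [Fraction(0), Fraction(3, 2), Fraction(1)]
print(check_certificate(x_star, y_star))  # True
```

The check needs only a few multiplications and comparisons, so a stakeholder or auditor can verify optimality independently. The dual values also carry business meaning: here the first constraint has a shadow price of zero because it is not binding at the optimum.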

An interesting contrast emerges when comparing OR and Machine Learning.

While ML algorithms are more opaque in their decision-making, they face less resistance in adoption. The key lies in expectation management.

ML practitioners acknowledge the probabilistic nature of their solutions and have developed robust tools for decision explanation. Meanwhile, OR's promise of deterministic optimality creates higher expectations for solution transparency.

This lack of transparency manifests in several critical ways:

↔️ Misalignment between model recommendations and organizational strategy

🙅♂️ Executive hesitation in implementing solutions they don't understand

🚧 Barriers in stakeholder communication and buy-in

🤝 Challenges in cross-functional collaboration

OR practitioners face a dual communication burden. They must translate business problems into mathematical models and explain complex solutions to stakeholders. This challenge becomes harder when tools and methods lack transparency.

Understanding how we arrived at this opacity is crucial for charting a path forward. The historical development of OR has created structural barriers to transparency, and recognizing these is the first step toward dismantling them.

How did we get here?

📜 Historical reasons

The foundations of modern OR's transparency challenges were laid during its golden age of software development (1980s-1990s). This period saw the emergence of powerful but closed systems that shaped the field's culture for decades.

Proprietary, enterprise-grade solutions dominated the landscape across multiple domains:

Optimization Solvers (CPLEX, FICO Xpress): powerful, complex environments that set the standard for opaque solutions

Simulation Tools (SIMAN/Arena): sophisticated but closed systems for process modeling

Decision Support Systems (Expert Choice): proprietary frameworks with undisclosed methodologies

Supply Chain Solutions (i2 Technologies): industry-specific tools that embed OR techniques behind opaque interfaces

Several interconnected factors reinforced this closed ecosystem:

💸 Economic realities: vendors faced high development costs and charged premium prices to recoup them. Limited market competition enabled exclusive business models.

💾 Technological context: sharing software was harder then because public code repositories were scarce; it was possible but impractical. Computational constraints favored highly optimized, closed implementations, and hardware limitations made efficient proprietary solutions more viable. Because OR problems are computationally demanding, they required machines with fast CPUs and ample RAM, which were expensive.

🔁 Academic vs. industry: the two worlds have different incentive structures. Universities need to publish papers (the more sophisticated, the better), while businesses prioritize practical implementation (where simple solutions are often better). Academic incentives favored theoretical publications over open-source tools, and enterprise software models prioritized exclusive, high-margin products. Together, these forces limited the transfer of knowledge and tools between academia and industry.

🏛️ Cultural evolution: OR professionals viewed methodological complexity as a competitive advantage. The field also relies on highly mathematical notation, which raises another barrier to entry. Since each industry has its own optimization problems and almost every business has unique constraints, OR is a fragmented field, making general-purpose software less appealing.

This historical development had lasting consequences. It delayed the democratization of OR tools, reduced cross-disciplinary collaboration, and slowed innovation compared to open fields.

While OR remained in its closed ecosystem, ML evolved differently.

Can we learn from Machine Learning and restart Operations Research to make it greater than ever?

💡 Learning from Machine Learning: a blueprint for transparency

Machine Learning's transparency-first approach offers valuable lessons for Operations Research.

While ML faces algorithmic opacity challenges, the field has cultivated a culture of openness that drives innovation and adoption.

I see three pillars of ML transparency.

🔓 Open knowledge ecosystem

A code-sharing culture built on public repositories drives open research, and a preprint culture means top-tier work often appears first on arXiv.

This creates a reproducible research environment with standard datasets and evaluation metrics.

Peer review extends beyond traditional channels through community-driven scrutiny.

🤝 Democratized innovation

You can visit GitHub to find countless open-source repositories of tools (like PyTorch, TensorFlow, or scikit-learn) and educational resources (like free courses, tutorials, and documentation).

These platforms also offer discussion forums, such as GitHub Discussions, where users debate important development tasks. This fosters collaborative development.

🔍 Transparency technologies

Though you may struggle to understand why a model produced a particular prediction, decision explanation tools like SHAP and LIME can help.
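For intuition about what SHAP computes, here is a from-scratch sketch of exact Shapley values for a tiny hypothetical model; it is not the `shap` library's API. Each feature's attribution is its weighted average marginal contribution across all coalitions of the other features. "Absent" features are replaced by a baseline value, which is one common convention among several and an assumption of this sketch.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        # Evaluate the model with only the coalition's features set to the real input
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = Fraction(0)
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = Fraction(factorial(size) * factorial(n - size - 1), factorial(n))
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


# Hypothetical toy scoring model: an additive term plus an interaction
def model(v):
    a, b, c = v
    return 2 * a + b * c


x = [1, 2, 3]
baseline = [0, 0, 0]
phis = shapley_values(model, x, baseline)
print([str(p) for p in phis])  # ['2', '3', '3']
```

The attributions sum to `model(x) - model(baseline)` = 8, and the interaction `b * c` is split evenly between `b` and `c`. Exact enumeration grows exponentially with the number of features, which is why practical explanation tools rely on approximations and model-specific shortcuts.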

Visualization tools like TensorBoard and Weights & Biases let you follow the training process and inspect the resulting model weights.

An ecosystem of tools like MLflow or Kubeflow helps manage the entire ML cycle, including tracking experiments, packaging code into reproducible runs, and sharing and deploying models.

→ The transformation of OR doesn't require abandoning its mathematical rigor or unique strengths. Instead, we can:

📏 Maintain precision while improving accessibility

🎓 Preserve expertise while broadening participation

🏭 Keep industrial applications while sharing general approaches

📜 Honor historical contributions while embracing modern practices

We can create a more vibrant, accessible, and innovative field by adopting ML's transparency practices while preserving OR's core strengths.

🏁 Some conclusions

The path forward is clear: Operations Research must embrace transparency to reach its full potential.

But this transformation promises more than openness. It offers a fundamental reimagining of how OR can drive business value.

Key benefits of a transparent OR ecosystem:

🚀 Accelerated innovation: open-source collaboration shortens development cycles, cross-pollinates ideas, and speeds up problem-solving through shared knowledge.

🌟 Talent development and attraction: lower barriers for new practitioners and clear learning paths for aspiring OR professionals.

🤝 Enhanced trust and adoption: a clearer value proposition for decision-makers, better stakeholder understanding of solutions, and greater implementation confidence, resulting in stronger alignment with modern business practices.

🏆 Competitive advantage: faster deployment of optimization solutions and greater adaptability to new tech stacks.

The transformation of OR is essential for the field's future.

Join the movement:

📖 Share your transparency success stories

💻 Contribute to open-source OR projects

📢 Join community discussions

🤍 Champion transparency in your organization

💡 Share this article with colleagues advocating for change

Let’s keep optimizing,

Borja.

How efficiently you actualize the value of optimization into a live business process matters just as much as the modeling tools and solvers you choose.

Nextmv is a DecisionOps platform that simplifies the path from research mode to real-world impact:

👟 Prototype and productionalize models faster with integrations (HiGHS, Pyomo, OR-Tools, Hexaly, AMPL, Gurobi, scikit-learn) and a common API layer

🧪 Innovate, iterate, and manage rollout risk with a suite of model testing tools and results visualizations

🙌 Manage team capacity and stakeholders by increasing transparency into model development to build confidence

👀 Leverage turnkey infrastructure and monitoring that scales with your needs and provides performance observability

Bring your custom model code to Nextmv or explore with a quickstart template.
