📈 #51 Operations Research reimagined (Part II): commoditization

Let's explore how we can learn from ML to make OR more accessible

Today’s post is sponsored by…

Empowering data scientists and OR professionals with ready-made scheduling and routing optimization models through APIs.


Operations Research (OR) can drive significant business value, yet it’s often seen as a niche, complex, and hard-to-apply discipline.

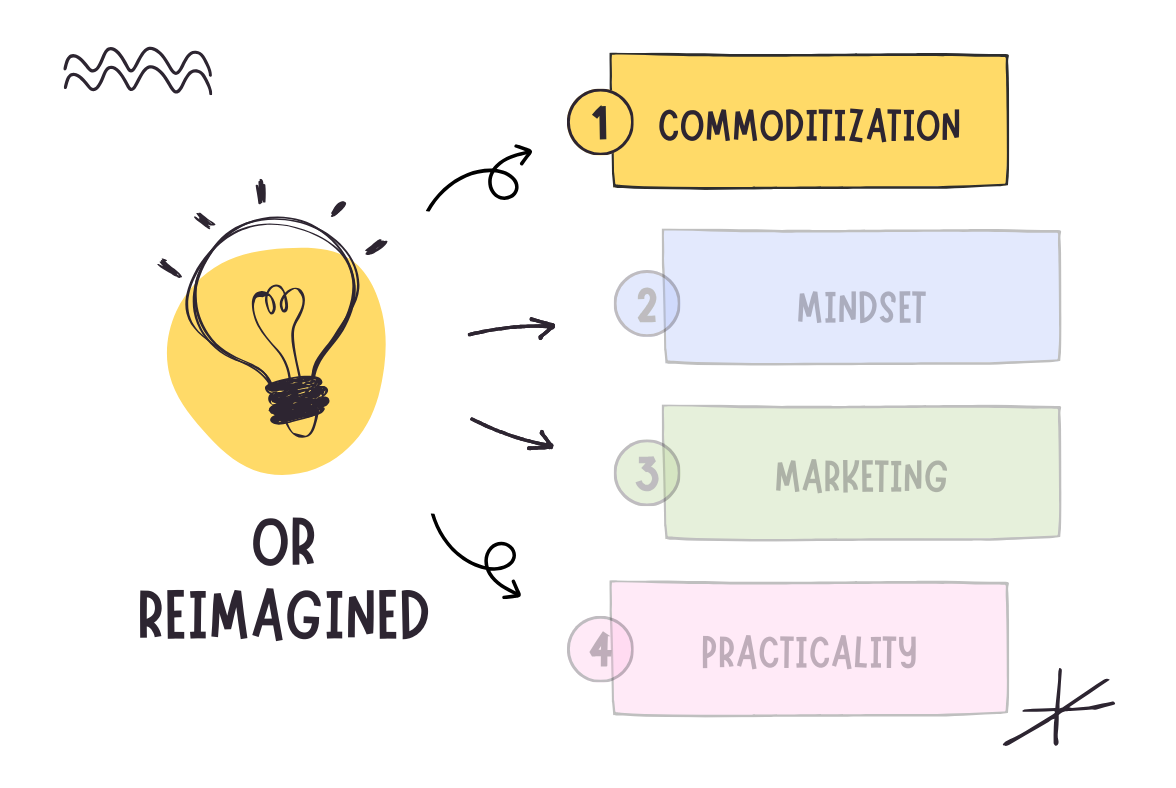

In this post, we’ll explore commoditization and its impact on Machine Learning (ML). We’ll also consider the key pathways for wider adoption and innovation in OR.

The main premise is that increasing the commoditization of OR - making its tools and techniques more accessible, user-friendly, and widely available - could have profound impacts.

It can spark innovation, drive broader adoption, and extend optimization reach across industries.

We can identify opportunities to bridge the "commoditization gap" in OR and unleash its potential by comparing the journeys of ML and OR.

That’s my plan for today in Feasible:

🤖 See the main factors of ML commoditization

⚖️ Compare ML and OR to understand the commoditization gap in OR

🚀 Suggest pathways to OR commoditization for everyone’s benefit

Remember, this is the second post of a six-part series in which I discuss the issues that have kept OR from reaching its full potential and help you avoid them.

🎧 Remember, too, that you can listen to a podcast-style version of this post. It’s at the top of the post!

Ready? → Let’s get started! 🪂

🤖 Key drivers of ML commoditization

Several factors have accelerated ML commoditization, including:

High market demand,

strong open-source communities,

and user-friendly interfaces and simplified workflows.

📈 High market demand

Businesses need predictive analytics across industries, driving market demand and funding.

As data volumes grow, businesses rely more and more on data-driven decision-making. Predictive analytics serves their need to anticipate the future and make better decisions.

Remember the negative feedback loop OR is stuck in? ML isn’t. Where OR has “limited adoption,” ML has the opposite, and that generates positive feedback at every stage of the loop.

💪🏻 Strong open-source communities

The open-source ML community has democratized tools and created a huge support network, making it easy for newcomers to learn and experiment.

ML has solid tools that have become the standard in several areas.

Want to apply ML easily? Go to scikit-learn.

Want to explore Deep Learning? Go to TensorFlow or PyTorch.

Want to explain the results more easily? Use SHAP.

This open-source mindset has widened ML's reach and fostered Python development. You can create an environment with Conda in a couple of minutes, and plotting your data is easy with Plotly.

🤗 User-friendly interfaces and simplified workflows

The ML ecosystem has also produced tools that let anyone start working without prior knowledge.

A non-technical user can view their data with Tableau, Power BI, or Google Looker Studio. There’s an ecosystem where anyone can manage an entire pipeline with AutoML without needing to understand the details.

This has lowered entry barriers and expanded the pool of potential ML practitioners.

The ML ecosystem has succeeded in creating abstraction layers:

ML Layers:

High-level (AutoML) → Mid-level (sklearn) → Low-level (PyTorch) → Mathematical Foundation

OR Layers:

Mathematical Foundation → Low-level (Solvers) → [Missing Middle Layer] → [Missing High Level]

But in OR, where did those middle and high levels of abstraction go?

⚖️ The OR commoditization gap

We can look at other highly commoditized fields like ML to understand the gap in OR solutions development.

Let’s look at a simplified ML project pipeline with the following stages:

Data collection, preprocessing, and feature engineering: using databases to store and clean the relevant data, and create the main features for the problem.

Problem definition: selecting the model architecture, loss function, and hyperparameters to tune.

Solution method selection and training: select the model and train it on data.

Deployment: putting the model into production using MLOps tools, APIs, etc.

Monitoring and maintenance: create alerts and automated retraining for model evolution while the model is deployed.

You can easily translate all those steps into OR with minor changes, such as:

Data collection and preprocessing: same as in ML, but no need for feature engineering.

Problem definition: In OR, you define the objective function, constraints, decision variables, etc. of your optimization problem. Therefore, you need a mathematical background to translate business needs into a math problem.

Solution method selection and training: In this step, you select the solver and its parameters to get the best results in the shortest execution time. There’s no training in OR, but you need to adjust parameters and parts of the model if it doesn’t work as expected.

Deployment: same as in ML.

Monitoring and maintenance: same as in ML, but there is no need to retrain anything. Instead, you adapt the algorithms and models to business changes.
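To make the problem definition step more concrete, here is a minimal sketch in plain Python of a made-up product-mix problem. The products, profits, and resource limits are invented for illustration, and exhaustive enumeration stands in for a real solver, which only works because the example is tiny.

```python
from itertools import product

# Hypothetical data: profit and resource use per unit produced.
PROFIT = {"chairs": 30, "tables": 50}
HOURS = {"chairs": 2, "tables": 4}   # labor hours per unit
WOOD = {"chairs": 3, "tables": 5}    # wood units per unit
MAX_HOURS = 40
MAX_WOOD = 55


def objective(plan):
    """Objective function: total profit of a production plan."""
    return sum(PROFIT[p] * qty for p, qty in plan.items())


def is_feasible(plan):
    """Constraints: stay within the available labor hours and wood."""
    hours = sum(HOURS[p] * qty for p, qty in plan.items())
    wood = sum(WOOD[p] * qty for p, qty in plan.items())
    return hours <= MAX_HOURS and wood <= MAX_WOOD


def solve(max_units=20):
    """Try every integer plan (the decision variables) and keep the best feasible one."""
    best_plan, best_value = None, float("-inf")
    names = list(PROFIT)
    for quantities in product(range(max_units + 1), repeat=len(names)):
        plan = dict(zip(names, quantities))
        if is_feasible(plan) and objective(plan) > best_value:
            best_plan, best_value = plan, objective(plan)
    return best_plan, best_value


best_plan, best_value = solve()
print(best_plan, best_value)
```

Notice where the effort goes: the "solver" is a few lines, while deciding what counts as a decision variable, a constraint, or the objective is the part that requires translating the business into math.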

Are there tools for every step in ML, and are they available in the OR field?

Let’s see it in a table to compare them and see the OR gap, considering the following legend:

⭐ (Very Low): Almost completely custom work required

⭐⭐ (Low): Some standard tools but mostly custom

⭐⭐⭐ (Medium): A mix of standard and custom elements

⭐⭐⭐⭐ (High): Mostly standardized with some customization

⭐⭐⭐⭐⭐ (Very High): Almost fully standardized and automated

In ML, everything is standardized with minor customization. This doesn’t mean practitioners don’t work, but that they have tools that simplify their tasks.

OR lacks those tools in crucial parts of the flow. I'm leaving the last step out of this comparison, as it is mainly tied to business KPIs and rules, which leaves little room for standardization. However, I see big differences in two stages: problem definition and deployment.

I could explain why, or at least give some reasons, but this post would get too long, so I'll discuss the challenges of problem definition and deployment in a separate one. The fundamental difference lies in problem structure:

The OR problem formulation is more custom than the standardized patterns (classification, regression) in ML.

Integrating OR solutions into production environments poses its own challenges, such as allocating the right hardware for a problem, since solve time usually doesn't grow linearly with problem size.

Commoditization isn't impossible in those stages; it requires a different approach.
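To see why solve time rarely grows linearly, here is a back-of-the-envelope sketch that counts the possible visiting orders for one vehicle and a given number of stops. Treating the search space as "every possible order" is an illustrative assumption: real solvers prune most of it, but this worst-case growth is what makes hardware sizing hard.

```python
from math import factorial


def visiting_orders(stops: int) -> int:
    """Number of distinct orders in which one vehicle can visit `stops` locations."""
    return factorial(stops)


for n in (5, 10, 15, 20):
    print(f"{n:>2} stops -> {visiting_orders(n):,} possible orders")
```

Going from 5 to 10 stops doubles the input but multiplies the number of possible orders by 30,240.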

🚀 Pathways to OR commoditization

Commoditization matters because it can:

💡 Spark innovation.

🌐 Drive broader adoption.

📈 Extend optimization reach across industries.

Increasing commoditization in OR could have profound impacts.

Wider adoption would allow more businesses, especially small and medium enterprises (SMEs), to benefit from optimization, a powerful but underutilized tool. This democratization would drive innovation and foster a new generation of OR practitioners who could apply techniques creatively across domains.

Ideas to bridge the commoditization gap in OR:

Developing standardized modeling frameworks for common OR problem types: Just as ML has standard tasks (classification, regression), OR should develop standard patterns for common problem types (vehicle routing, resource allocation, production scheduling) to reduce custom modeling needs. Customization should still be allowed, since every business operates differently and some constraints or objective functions apply to some businesses but not to others.

Encouraging an active open-source OR community: It could accelerate tool development and support, as it did in ML.

Investing in education and practical tools to lower the entry barrier: Providing industry-specific education, practical toolkits, and accessible interfaces aligns with the previous two points.

Pursuing cloud-native OR solutions to simplify development: Building cloud-native OR solutions with accessible APIs and integration options simplifies deployment and makes OR more responsive to real-world needs.

I’d like to see OR focus on creating better abstraction layers that allow users to start with high-level, industry-specific interfaces and gradually dive deeper into mathematical modeling when needed.
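One way to picture those layers, as a rough sketch only: a high-level function for a standard problem type (here, assigning workers to shifts) that hides the model but still accepts custom business rules. Every name below (`assign_shifts`, `extra_rules`, the workers and costs) is hypothetical, and brute-force enumeration stands in for the solver layer.

```python
from itertools import permutations
from typing import Callable, Dict

Assignment = Dict[str, str]           # worker -> shift
Rule = Callable[[Assignment], bool]   # custom business constraint


def _solve_assignment(workers, shifts, cost, rules):
    """Low level: enumerate one-to-one assignments and keep the cheapest valid one."""
    best, best_cost = None, float("inf")
    for order in permutations(shifts, len(workers)):
        candidate = dict(zip(workers, order))
        if not all(rule(candidate) for rule in rules):
            continue
        total = sum(cost[(w, s)] for w, s in candidate.items())
        if total < best_cost:
            best, best_cost = candidate, total
    return best, best_cost


def assign_shifts(workers, shifts, cost, extra_rules=()):
    """High level: a ready-made shift assignment pattern with optional custom rules."""
    if len(workers) > len(shifts):
        raise ValueError("Need at least as many shifts as workers")
    return _solve_assignment(workers, shifts, cost, list(extra_rules))


# Hypothetical data: cost of giving each worker each shift.
workers = ["Ana", "Ben"]
shifts = ["morning", "night"]
cost = {("Ana", "morning"): 1, ("Ana", "night"): 4,
        ("Ben", "morning"): 2, ("Ben", "night"): 3}

default_plan, default_cost = assign_shifts(workers, shifts, cost)


def no_ben_nights(plan):
    """Customization: this business does not allow Ben on night shifts."""
    return plan.get("Ben") != "night"


custom_plan, custom_cost = assign_shifts(workers, shifts, cost, [no_ben_nights])
print(default_plan, default_cost)
print(custom_plan, custom_cost)
```

A newcomer only needs `assign_shifts`; someone with a modeling background can drop down to the lower level or swap the enumeration for a real solver without changing how the function is called.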

🏁 Some conclusions

Increasing OR commoditization is crucial for wider adoption.

The core issue isn't that OR can't be commoditized; it's that the fundamental nature of OR requires different approaches to commoditization than ML.

Lessons learned from ML can provide valuable insights for the OR community. By focusing on standardization, abstraction, and integration, we can make OR more accessible while maintaining its power and flexibility.

The goal isn't to oversimplify OR but to create appropriate abstraction layers that make it accessible while preserving the ability to handle complex, custom problems.

Let's work together to make OR a more commoditized, widely adopted, and innovative field, empowering organizations of all sizes to harness optimization for success.

Let’s keep optimizing,

Borja.

What if you could skip the lengthy process of building optimization models and get straight to running impactful tests on real-world data?

Timefold.ai enables data scientists and OR professionals to deliver fast, reliable results for projects involving complex field service routing and workforce scheduling challenges.

✔️ Access ready-made optimization models via APIs, and integrate advanced scheduling and routing capabilities easily.

✔️ Remove the guesswork and pressure of building robust, real-world-ready models from scratch.

✔️ Deliver optimization expertise in record time that your team and clients will trust and value.

Try our ready-made models today and see how Timefold can enhance your practice, equipping you to deliver smarter, faster results in scheduling and routing optimization.