📈 #48 Gurobi vs PDLP: The CPU Showdown with SolverArena

Analyzing CPU performance of PDLP and Gurobi using SolverArena as the comparison framework

A good friend mentioned wanting to test PDLP a few weeks ago.

I talked about the new Google solver in “The shortest email ever thanks to Google Research” and in my recent post about bridging the gap between traditional solvers and the demands of large-scale optimization.

I don’t want to spoil anything, but he said he wanted to share his findings.

He initially considered posting on LinkedIn, but I felt it wasn’t the best platform for such a detailed analysis. So I offered him the opportunity to write a guest piece here on Feasible instead. 🎉

Today, we have a special guest: Javier Berga.

I met Javier at Trucksters. Now he’s an optimization consultant at Decide4AI, where he uses advanced Operations Research techniques to tackle complex challenges.

He excels at translating advanced methodologies into practical solutions that impact business resources and processes. 💡

And today, he’s going to do just that!

I’ll leave you with him. ✨

In every project I’ve worked on, I’ve consistently faced the challenge of balancing two key factors:

💰 The cost of the tools we use, and

⚡ the performance they deliver.

Each client varies significantly in size, budget, and operational needs, making this balance a unique consideration for every case.

A critical aspect of this balance is choosing the right solver.

Before diving into any major development, especially when uncertainties exist, it’s essential to run a proof of concept. This involves testing a prototype model on project-relevant instances to identify the limitations of different solvers and shape a clear path forward.

A few weeks ago, I found myself immersed in one of those tests, comparing two very interesting solvers: Gurobi and HiGHS' implementation of PDLP, both running on CPU.

While designing and building the experiment, I had a sense of déjà vu.

Why?

Solver comparison is a very common activity in our work for various reasons:

✨ A new solver emerges

🆕 An existing solver releases a new feature

🆚 We compare multiple solvers

🔧 We tune the parameters of a solver

And this got me thinking: Wouldn't it be fantastic to have a tool that facilitates these comparisons quickly and easily?

That's how SolverArena 🥊 was born.

⚖️ SolverArena: Easy-peasy comparisons

SolverArena is an open-source library I designed to simplify and speed up performance comparisons between different solvers on optimization problems.

The core idea behind this project is to provide a unified interface that abstracts the differences between solvers and facilitates their comparison.

Additionally, the library is already available on PyPI, making it easy for the community to install and use.

🎯 Benefits of SolverArena

Fast and efficient comparisons: By centralizing the execution of different solvers into a single script, SolverArena allows for quicker comparisons, eliminating the need to manually switch between different tools or adapt the model's code for each solver.

Automation: SolverArena is designed with automation in mind. Users can run tests on multiple solvers and get structured results ready for analysis, saving time during the experimentation phase.

Extensibility: While the library currently supports popular solvers like Gurobi and PDLP (HiGHS), my goal is to continue expanding support for other solvers, both commercial and open-source, to make SolverArena a more versatile platform.

📌 Key Features

Solver Abstraction: SolverArena allows users to work with multiple solvers without needing to modify the model's code for each one. The focus is on efficient comparison, not on implementing each solver individually.

Flexible Input: Users can provide:

A list of paths to MPS files, a standard file format for optimization problems.

A list of solvers, from which they can select the ones they wish to compare.

Optionally, a dictionary of custom parameters for each solver, allowing users to fine-tune specific details and get the most out of each tool.
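To picture the kind of abstraction described above, here is a minimal, hypothetical sketch of a solver-agnostic runner that takes the same three inputs. It is not SolverArena's actual API: `run_comparison`, `register`, and the two stub adapters are illustrative names, and a real adapter would call the solver's own Python interface to read and solve the MPS file instead of returning a fixed status. The per-solver parameter dictionary is passed through untouched, so each solver keeps its own parameter names (for example Gurobi's `TimeLimit` or HiGHS's `time_limit`).

```python
import time
from typing import Callable, Dict, List, Optional

# Hypothetical adapter signature: (mps_path, solver_params) -> status string.
SolverFn = Callable[[str, dict], str]

# Registry mapping a solver name to its adapter (illustrative, not SolverArena's).
SOLVERS: Dict[str, SolverFn] = {}


def register(name: str) -> Callable[[SolverFn], SolverFn]:
    """Decorator that adds an adapter to the registry under `name`."""
    def wrap(fn: SolverFn) -> SolverFn:
        SOLVERS[name] = fn
        return fn
    return wrap


@register("stub_a")
def solve_stub_a(mps_path: str, params: dict) -> str:
    # Placeholder: a real adapter would read `mps_path`, apply `params`, and solve.
    return "optimal"


@register("stub_b")
def solve_stub_b(mps_path: str, params: dict) -> str:
    # Placeholder for a second solver behind the same interface.
    return "optimal"


def run_comparison(mps_files: List[str], solvers: List[str],
                   parameters: Optional[Dict[str, dict]] = None) -> List[dict]:
    """Run every selected solver on every file; return one result row per pair."""
    parameters = parameters or {}
    unknown = [name for name in solvers if name not in SOLVERS]
    if unknown:
        raise ValueError(f"unknown solver(s): {unknown}")
    rows = []
    for path in mps_files:
        for name in solvers:
            start = time.perf_counter()
            # Each solver only ever sees its own parameter dictionary.
            status = SOLVERS[name](path, parameters.get(name, {}))
            rows.append({
                "file": path,
                "solver": name,
                "status": status,
                "seconds": time.perf_counter() - start,
            })
    return rows
```

Adding a solver then means registering one more adapter; the comparison loop itself never changes, which is the point of the abstraction.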

⚔️ PDLP vs Gurobi

Going back to the tests…

When it comes to optimization solvers, Gurobi has long been considered the industry standard. But in my recent tests, PDLP gave Gurobi a serious run for its money. Literally.

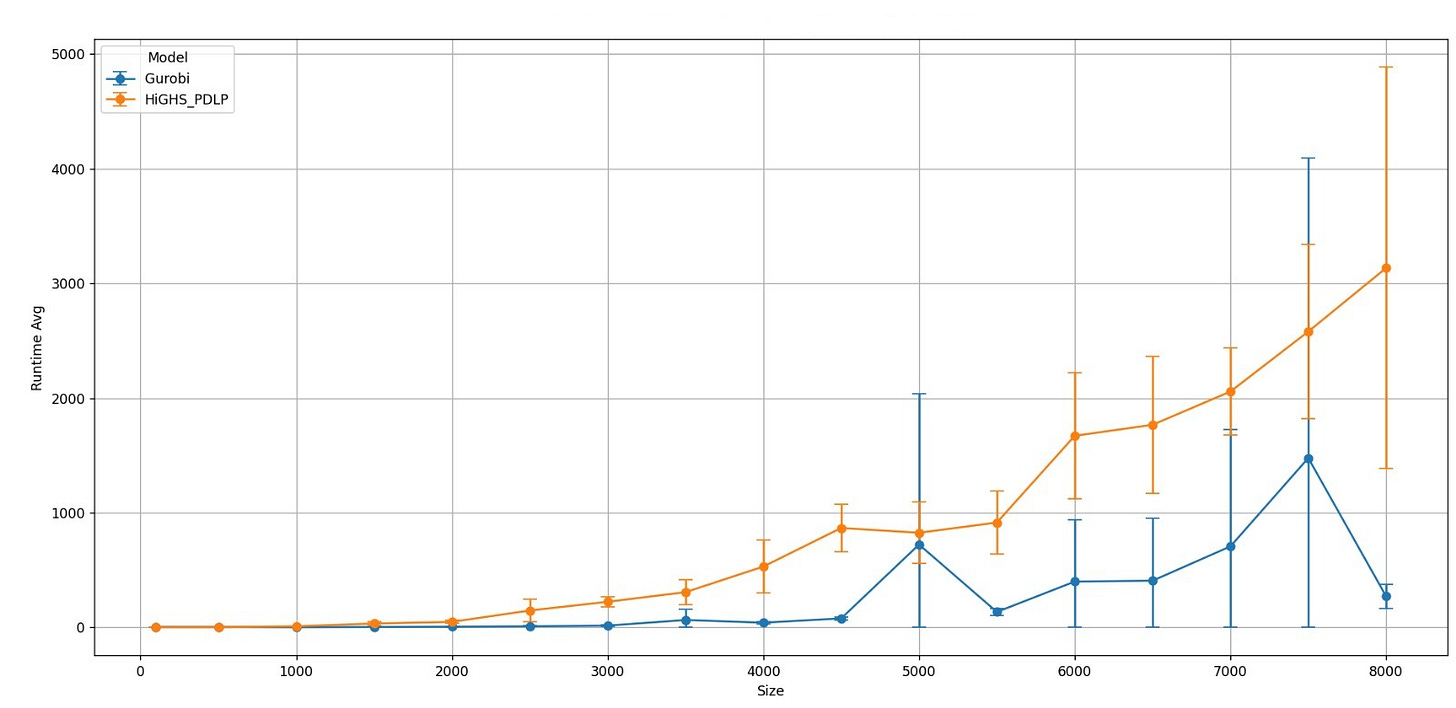

The results were quite revealing.

🏆 Results

Consistency in small and medium-sized problems: As expected, Gurobi consistently performed well on smaller instances, solving all of them extremely quickly with very little variability between runs. However, what stood out was that PDLP, despite being an open-source option, managed to solve these instances in comparable times with minimal standard deviation.

Scalability in large problems: As the instance sizes grew, I noticed that PDLP maintained surprisingly robust performance. While Gurobi was still faster overall, PDLP was able to solve very large instances in times that, although higher, remained within reasonable margins, demonstrating its ability to handle large-scale problems.

Time difference: Although Gurobi outperformed PDLP in absolute time across all instances, the difference between the two solvers wasn’t as large as I expected, especially on medium-sized instances. This suggests that PDLP could be a viable option, particularly for large problems and for projects where Gurobi's licensing costs are hard to justify.

PDLP is a first-order algorithm that promises a significant performance boost, especially because it can leverage GPU processing.
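Why does PDLP suit GPUs? PDLP builds on the primal-dual hybrid gradient (PDHG) method, whose iterations need only matrix-vector products with the constraint matrix and its transpose, with no matrix factorizations, and such products parallelize well on GPUs. The sketch below is plain, unenhanced PDHG for an LP of the form min cᵀx subject to Ax = b, x ≥ 0, written in pure Python for a tiny dense problem. It is not PDLP itself, which adds enhancements such as adaptive restarts, adaptive step sizes, and diagonal preconditioning, and it is not HiGHS's implementation.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(A: Matrix, x: Vector) -> Vector:
    """Compute A @ x for a dense row-major matrix."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def rmatvec(A: Matrix, y: Vector) -> Vector:
    """Compute A.T @ y without forming the transpose."""
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


def pdhg(c: Vector, A: Matrix, b: Vector, tau: float, sigma: float,
         iters: int = 5000) -> Tuple[Vector, Vector]:
    """Vanilla PDHG for min c^T x s.t. A x = b, x >= 0.

    Converges when tau * sigma * ||A||_2^2 < 1. Each iteration uses exactly
    one product with A^T and one with A: no factorization is ever needed.
    """
    x = [0.0] * len(c)
    y = [0.0] * len(b)
    for _ in range(iters):
        # Primal step: move against the Lagrangian gradient c - A^T y,
        # then project back onto x >= 0.
        aty = rmatvec(A, y)
        x_new = [max(0.0, xi - tau * (ci - gi)) for xi, ci, gi in zip(x, c, aty)]
        # Dual step: ascend on b - A x, evaluated at the extrapolated point 2*x_new - x.
        ax_bar = matvec(A, [2.0 * xn - xo for xn, xo in zip(x_new, x)])
        y = [yi + sigma * (bi - ai) for yi, bi, ai in zip(y, b, ax_bar)]
        x = x_new
    return x, y
```

For example, `pdhg([1.0, 2.0], [[1.0, 1.0]], [1.0], tau=0.5, sigma=0.5)` solves min x₁ + 2x₂ subject to x₁ + x₂ = 1, x ≥ 0, and should approach x = (1, 0) with dual value y = 1; the step sizes satisfy τσ‖A‖² = 0.25 · 2 = 0.5 < 1.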

Currently, HiGHS is developing its PDLP implementation for GPU execution.

Once it is available, I intend to repeat this experiment to measure the performance difference between CPU and GPU execution precisely and to assess how much hardware parallelization can help when solving large-scale optimization problems.

🔬 Experiment Methodology

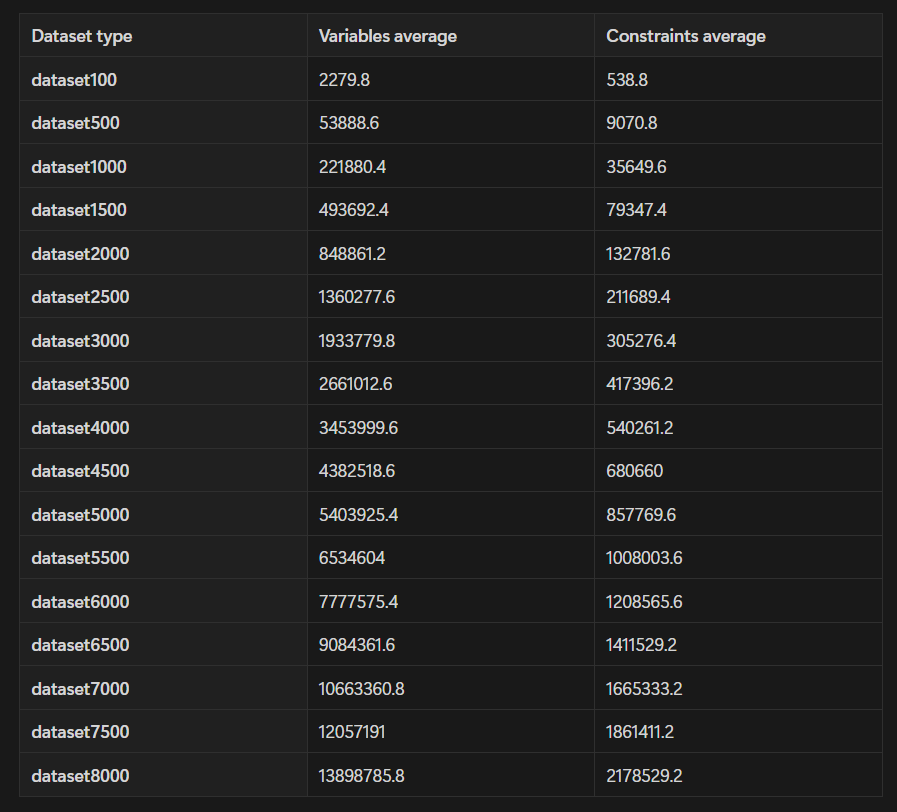

To evaluate performance, I used a problem that assigns groups of people to tables at an event. Since PDLP solves linear programs, I relaxed the model's integrality constraints so that PDLP could be applied.

Each instance generates a number of groups with random sizes and a number of tables with a random number of seats. The total number of seats matches the total number of attendees, and the goal is to seat every group at a single table, without splitting any group across tables.
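The post does not spell out the model, so the following is one plausible formulation, written here as an assumption: G is the set of groups with sizes s_g, T is the set of tables with capacities c_t, and x_{gt} indicates that group g sits at table t. The objective is not stated either, so w_{gt} is a placeholder cost. In the integer model x_{gt} ∈ {0, 1}, which is what keeps each group at one table; the LP relaxation replaces that with the bounds in the last line.

```latex
\begin{aligned}
\min_{x}\quad & \sum_{g \in G} \sum_{t \in T} w_{gt}\, x_{gt} \\
\text{s.t.}\quad & \sum_{t \in T} x_{gt} = 1 && \forall g \in G \\
& \sum_{g \in G} s_g\, x_{gt} \le c_t && \forall t \in T \\
& 0 \le x_{gt} \le 1 && \forall g \in G,\ t \in T
\end{aligned}
```

Under this formulation, when total seats equal total attendees (∑c_t = ∑s_g = N), the relaxation is always feasible: setting x_{gt} = c_t / N for every group places exactly c_t people at each table, whereas the integer version is an exact-fit packing problem that may have no solution for some random draws.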

Each instance is generated using an integer input parameter representing the total number of people attending the event.
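A generator along these lines can be sketched as follows. The post does not give the size distributions, so the ranges below (groups of 1 to 6 people, tables of 4 to 12 seats) and the function names are assumptions. Both lists are built by cutting the attendee count into random pieces, so total seats always equal total attendees, and changing `seed` yields the several instances per size.

```python
import random
from typing import List, Tuple


def random_partition(total: int, low: int, high: int, rng: random.Random) -> List[int]:
    """Split `total` into random integer parts drawn from [low, high].

    The last part is truncated to whatever remains, so it may fall below `low`.
    """
    parts = []
    remaining = total
    while remaining > 0:
        size = min(rng.randint(low, high), remaining)
        parts.append(size)
        remaining -= size
    return parts


def generate_instance(attendees: int, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (group_sizes, table_capacities), both summing to `attendees`."""
    rng = random.Random(seed)  # seeded so each instance is reproducible
    groups = random_partition(attendees, 1, 6, rng)   # assumed group sizes
    tables = random_partition(attendees, 4, 12, rng)  # assumed table capacities
    return groups, tables
```

Five instances of one size would then be `[generate_instance(n, seed) for seed in range(5)]`.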

Instances: Five instances were generated for each problem size, allowing me to obtain a representative sample for each level of complexity.

Time limit: For each problem, I set a time limit of 7200 seconds (2 hours).

Evaluated Solvers: The solvers I compared were Gurobi, an industry standard, and PDLP from HiGHS, an open-source solution.

📏 Metrics

To evaluate and compare the performance of different solvers, it is essential to consider not only the average solution time but also how these times vary based on the complexity of the instances and the consistency of the results.

For this reason, I aimed to consider the following metrics:

Solution time: I calculated the average time each solver took to solve each group of instances, and I also considered the standard deviation to measure the consistency of performance.

Scalability: I observed how each solver handled the increase in problem complexity as instance sizes grew.

Robustness: I looked at how much each solver's solve times varied across instances of the same size.
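The three metrics can be computed from a flat table of runs. The sketch below assumes each run is a dict with hypothetical keys `solver`, `size` (number of attendees), and `seconds`. The post does not say how runs that hit the 7200-second limit were counted, so capping them at the limit is an assumption.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, Tuple

TIME_LIMIT = 7200.0  # seconds, the limit used in the experiment

Summary = Dict[Tuple[str, int], Tuple[float, float]]


def summarize(rows: Iterable[dict]) -> Summary:
    """Solution time: mean and sample standard deviation per (solver, size)."""
    times = defaultdict(list)
    for r in rows:
        # Assumption: a run that hit the limit is counted at the limit itself.
        times[(r["solver"], r["size"])].append(min(r["seconds"], TIME_LIMIT))
    return {
        key: (statistics.mean(ts), statistics.stdev(ts) if len(ts) > 1 else 0.0)
        for key, ts in times.items()
    }


def time_ratio(summary: Summary, slower: str, faster: str, size: int) -> float:
    """Time difference: mean time of `slower` divided by mean time of `faster`."""
    return summary[(slower, size)][0] / summary[(faster, size)][0]


def scaling(summary: Summary, solver: str, small: int, large: int) -> float:
    """Scalability: growth factor of one solver's mean time between two sizes."""
    return summary[(solver, large)][0] / summary[(solver, small)][0]


def coefficient_of_variation(summary: Summary, solver: str, size: int) -> float:
    """Robustness: standard deviation relative to the mean (lower is steadier)."""
    mean, sd = summary[(solver, size)]
    return sd / mean
```

Normalizing the spread by the mean lets robustness be compared across sizes whose absolute times differ by orders of magnitude.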

🚀 Next steps

The development of SolverArena continues, and these are the next steps I have in mind:

Support for new formats: Currently, SolverArena accepts .mps files, but I plan to expand compatibility to other formats used in optimization problems, such as LP, OPL, and others. This will provide users with more flexibility when working with different types of models.

Improving parameter handling: One of the key points for efficient optimization is the proper tuning of solver parameters. I will work on better integration for parameter handling, making it easier for users to automatically adjust solver parameters without complications.

Adding new solvers: I will continue to add support for more solvers. Besides those already included, I am exploring the addition of solvers like CPLEX, GLPK, and other emerging solvers. This way, users will be able to compare performance across a wider range of tools.

For those interested in experimenting or contributing to the project, the repository is already available on GitHub.

Your feedback and contributions will be key to taking SolverArena to the next level.

I’d like to take this opportunity to thank you, the reader, for supporting spaces like Feasible, and to thank Borja Menéndez for the amazing work he does promoting Operations Research content.

Thank you!

Javier.